Observing System Design (OSD)

Capability Working Group

Facilitating the design of a comprehensive and multi-disciplinary observing system for the Southern Ocean

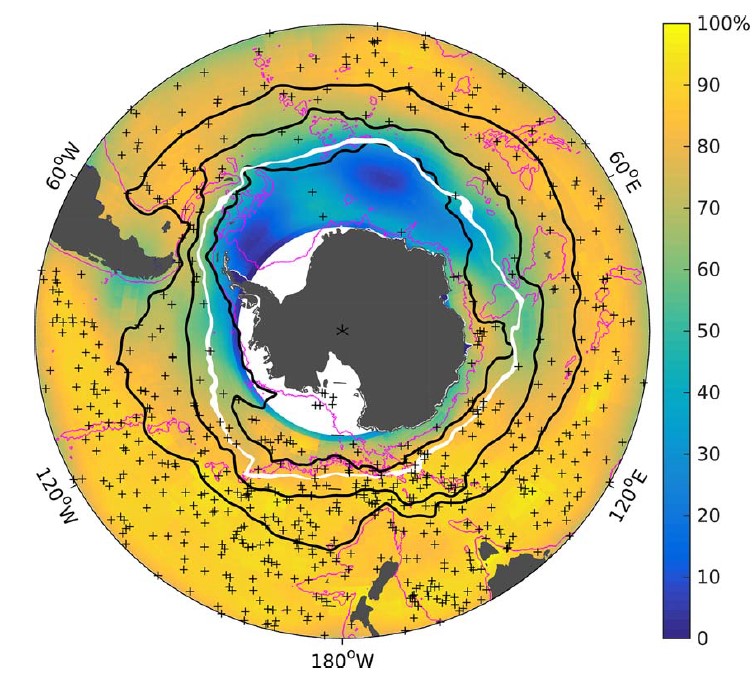

The Observing System Design (OSD) Capability Working Group developed from the need to quantify the minimum amount of data required to address specific quantities or variables of interest. This work supports efforts to gain the greatest scientific benefit from limited resources. The working group aims to analyze methods and provide guidance for optimizing observational strategies, and seeks to enhance collaboration and instigate discussion on this important topic.

In its two years of operation, the SOOS OSD Capability Working Group notably provided quantified observational targets for the AniBOS programme; modelled the optimal placement of moorings to constrain large-scale fluxes of heat in the Southern Ocean (Wei et al., 2020); and held a scientific workshop focused on bridging the Southern Ocean modelling and observational communities.

The full terms of reference for this working group can be found here.

- To facilitate the design of a comprehensive and multi-disciplinary observing system for the Southern Ocean.
- To advise on methods for assessing observing system design for given quantities of interest.